10.1 - Introduction to Surface Properties

Your eyes “see” an object because light has reflected off of the object into your eye. The properties of a surface affect how light rays reflect off of an object.

This lesson discusses the basic properties of surfaces and the mathematics used to calculate the interaction of reflected light and a surface.

Overview of Lessons

In the previous lessons you learned how to model light and its reflection off of surfaces. Wasn’t it amazing how much better the renderings looked when the color of the pixels was based on reflected light! However, we used a single color to represent a model’s appearance which made the rendering of the models too uniform and “pure.” Real-world objects have more variation over their surfaces. Modeling the surface properties of a model’s triangles is where we can approach photo-realism in WebGL computer generated images. (To get exact “photo-realism” you must use ray tracing, which is a totally different way to render 3D computer graphics images.)

Surface properties are complex and we will not be able to cover every possible property that graphics programs might use. But we will cover the basics, which are:

- Color: A surface does not have a single color but a set of colors, because it reacts differently to ambient, diffuse, and specular light reflection.

- Orientation: WebGL only renders triangles, but we can modify the normal vectors at a triangle’s vertices to simulate curved surfaces.

- Texture: A surface can have multiple colors. A good example is a piece of clothing.

- Smooth vs. Bumpy: A surface may be bumpy rather than smooth.

- Shiny vs. Dull: A surface might be irregular at a microscopic level and appear “dull” because it reflects light in all directions, even for specular reflection. Or it might be very smooth at a microscopic level, so that specular reflection has minimal scattering.

Overview of Code

Your JavaScript code will set up the surface properties of a model and your GPU fragment shader program will use those properties to calculate a color for each fragment of a triangle.

There are many scenarios for structuring your surface property descriptions. The major question is whether all of the surfaces of a model have the same properties, or whether individual surfaces have different properties. You need to consider these issues as you design your models and as you implement your shader programs. Remember, there are always trade-offs between memory usage and rendering speed. The following table summarizes these issues.

| Model surfaces | Rendering | Surface properties are defined as |
|---|---|---|
| all surfaces have the same properties | one call to gl.drawArrays() for the entire model | uniform variables |
| individual surfaces have different properties | one call to gl.drawArrays() for each set of surfaces with the same properties | uniform variables |
| individual surfaces have different properties | one call to gl.drawArrays() for the entire model | attribute variables |
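As a rough sketch of the middle approach in the table (one draw call per set of surfaces that share properties, with the properties passed as uniform variables), the loop below sets the uniforms and then draws each group. The uniform names `u_Diffuse_color` and `u_Shininess`, the function name, and the shape of the `groups` array are all assumptions made for illustration; they must match whatever your own shader programs and model data define.

```javascript
// Hypothetical helper: render a model whose triangles are grouped by
// surface properties. Each group is assumed to look like
//   { diffuse: [r, g, b], shininess: number, first: int, count: int }
// where first/count index a contiguous run of vertices in the bound buffer.
function renderBySurfaceGroup(gl, program, groups) {
  // Hypothetical uniform names; they must match your shader source.
  const diffuseLoc = gl.getUniformLocation(program, "u_Diffuse_color");
  const shininessLoc = gl.getUniformLocation(program, "u_Shininess");

  for (const group of groups) {
    // A uniform keeps the same value for every vertex in one draw call,
    // so each set of surfaces needs its own call.
    gl.uniform3fv(diffuseLoc, group.diffuse);
    gl.uniform1f(shininessLoc, group.shininess);
    gl.drawArrays(gl.TRIANGLES, group.first, group.count);
  }
}
```

The trade-off is the one the table describes: this version stores each property once per group but pays for several draw calls, while the attribute version stores properties per vertex and draws the model in a single call.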

Surface Property Transformations

As in the previous lessons, we will perform fragment shader calculations in “camera space” because:

- The GPU shader program knows the camera’s location and orientation without updating any uniform variables. (The camera is at the global origin and aligned with the global axes.)

- Having the camera at the origin simplifies some of the lighting calculations.

This means that any surface properties that are related to the location or orientation of a model must be transformed into “camera space.” This is straightforward in most cases, but problematic if your transformation includes non-uniform scaling. Note that a camera transformation never includes scaling – only rotation and translation. So the problem arises if your model transformation includes non-uniform scaling.

No Scaling or Uniform Scaling

If your model transformation includes translation, rotation, and/or uniform scaling, you can use your model-view transformation to convert a model’s normal vectors into “camera space”. Remember that vectors have no location, only magnitude and direction. Therefore, a vector’s homogeneous coordinate must be zero so that translation is never applied to it. The vector <dx,dy,dz> becomes <dx,dy,dz,0> before being multiplied by the model-view transformation.
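The effect of the zero homogeneous coordinate can be checked with a small sketch. It assumes matrices are stored as flat, column-major arrays of 16 numbers (the layout WebGL expects); the function names and the example matrix are made up for illustration.

```javascript
// Multiply a 4x4 matrix (flat, column-major) by a 4-component vector.
function multiplyMat4Vec4(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}

// Transform a direction <dx,dy,dz> as <dx,dy,dz,0> so translation is ignored.
function transformVector(m, d) {
  return multiplyMat4Vec4(m, [d[0], d[1], d[2], 0]).slice(0, 3);
}

// Example model-view: rotate 90 degrees about Z, then translate by (5, 6, 7).
const modelView = [
   0, 1, 0, 0,  // first column
  -1, 0, 0, 0,  // second column
   0, 0, 1, 0,  // third column
   5, 6, 7, 1,  // fourth column (translation)
];

const normal = transformVector(modelView, [1, 0, 0]);    // [0, 1, 0]
const point = multiplyMat4Vec4(modelView, [1, 0, 0, 1]); // [5, 7, 7, 1]
```

The same matrix moves the point (1,0,0) to (5,7,7), but the vector <1,0,0> is only rotated to <0,1,0>: with a fourth component of zero, the translation column contributes nothing.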

Note that you can never use your projection-view-model transformation on model normal vectors. A projection matrix typically contains non-uniform scaling and puts vertices in a 3D space different from the camera view.

Non-uniform scaling

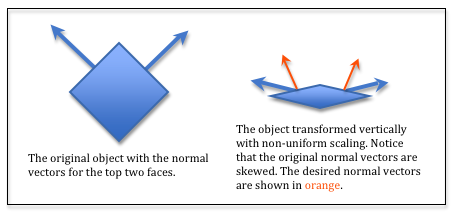

Non-uniform scaling.

Consider the diagram above. If you apply a transformation that includes non-uniform scaling to a model, the normal vectors become skewed and no longer point in the correct direction. This simple example shows that the model-view transformation used on the vertices of a model does not work for the normal vectors of the model if the transformation includes non-uniform scaling.
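A small numeric check makes the skew concrete. The vectors and scale factors below are illustrative values chosen for this sketch, not taken from the diagram: `p` lies in a surface tilted at 45 degrees and `n` is that surface's normal.

```javascript
function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Apply a pure scaling transformation with factors s = [sx, sy, sz].
function scale(v, s) {
  return [v[0] * s[0], v[1] * s[1], v[2] * s[2]];
}

const p = [1, -1, 0];  // a vector lying in the surface
const n = [1, 1, 0];   // the surface normal
const before = dot(p, n);  // 0, so p and n are perpendicular

// Non-uniform scaling: stretch x by 2 and apply it to both vectors.
const nonUniform = [2, 1, 1];
const afterNonUniform = dot(scale(p, nonUniform), scale(n, nonUniform));  // 3

// Uniform scaling keeps the two vectors perpendicular.
const uniform = [2, 2, 2];
const afterUniform = dot(scale(p, uniform), scale(n, uniform));  // 0
```

After the non-uniform scale the dot product is 3 rather than 0, so the transformed "normal" is no longer perpendicular to the transformed surface.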

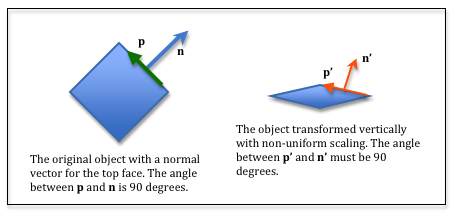

Non-uniform scaling.

Let’s calculate a transformation that will transform the normal vectors correctly. Assume we have a vector, p, that lies in the plane of the top face. The angle between p and n must be 90 degrees. The dot product of p and n is proportional to the cosine of the angle between them, and the cosine of 90 degrees is zero. Therefore,

dot(p,n) === 0

Let M be the transformation that contains the non-uniform scaling of the model. Therefore p' = M * p. We need to solve for a different transformation, S, that will transform n into n' such that dot(p',n') === 0. Therefore,

dot(M * p, S * n) === 0

You can reorder the multiplication of a matrix times a vector by taking the transpose of each one like this:

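$$
\begin{bmatrix} a & e & i & m \\ b & f & j & n \\ c & g & k & o \\ d & h & l & p \end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 0 \end{bmatrix}
=
\begin{bmatrix} x & y & z & 0 \end{bmatrix}
\begin{bmatrix} a & b & c & d \\ e & f & g & h \\ i & j & k & l \\ m & n & o & p \end{bmatrix}
\qquad \text{(Eq2)}
$$

$$
\begin{aligned}
M^T S &= I \\
(M^T)^{-1} M^T S &= (M^T)^{-1} I \\
S &= (M^T)^{-1} \\
S &= (M^{-1})^T
\end{aligned}
$$

(See the matrix identities below.)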
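One way to see why the product of the transpose of M with S should be the identity is to write the dot product as a matrix product. The following is a sketch that treats p and n as column vectors, so that dot(a,b) is the row vector a-transpose times b, and uses the transpose reordering from Eq2:

$$
\begin{aligned}
\operatorname{dot}(M p,\; S n) &= (M p)^T (S n) \\
&= p^T M^T S \, n \\
&= p^T n \qquad \text{if } M^T S = I \\
&= \operatorname{dot}(p, n) = 0
\end{aligned}
$$

So choosing S such that the transpose of M times S equals I is sufficient to keep n' perpendicular to p', and solving that condition for S gives the inverse transpose of M.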

Conclusion

If you use non-uniform scaling in your model transformations, your JavaScript code must create three separate transformations for a model rendering:

- A model-view transformation to transform the model’s vertices into “camera space”.

- A model-view inverse-transposed transformation to transform the model’s normal vectors into “camera space”.

- A projection-view-model transformation to transform a model’s vertices for the graphics pipeline.
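The second transformation in the list can be sketched in plain JavaScript. Because a vector's homogeneous coordinate is zero, only the upper-left 3x3 part of the model-view matrix affects it, so this sketch works on a 3x3 matrix stored as an array of rows. The function names and example values are assumptions for illustration; a matrix library would normally supply this operation.

```javascript
// Inverse-transpose of a 3x3 matrix given as rows: m[row][col].
// (A^-1)^T equals the cofactor matrix of A divided by det(A).
function inverseTranspose3(m) {
  const [[a, b, c], [d, e, f], [g, h, i]] = m;
  const cof = [
    [e * i - f * h, f * g - d * i, d * h - e * g],
    [c * h - b * i, a * i - c * g, b * g - a * h],
    [b * f - c * e, c * d - a * f, a * e - b * d],
  ];
  const det = a * cof[0][0] + b * cof[0][1] + c * cof[0][2];
  if (det === 0) throw new Error("matrix is not invertible");
  return cof.map(row => row.map(x => x / det));
}

function multiplyMat3Vec3(m, v) {
  return m.map(row => row[0] * v[0] + row[1] * v[1] + row[2] * v[2]);
}

function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Illustrative values: M stretches x by 2; p lies in a surface, n is its normal.
const M = [[2, 0, 0], [0, 1, 0], [0, 0, 1]];
const S = inverseTranspose3(M);  // [[0.5, 0, 0], [0, 1, 0], [0, 0, 1]]
const p = [1, -1, 0];
const n = [1, 1, 0];

const pPrime = multiplyMat3Vec3(M, p);                // [2, -1, 0]
const wrong = dot(pPrime, multiplyMat3Vec3(M, n));    // 3: n transformed by M is skewed
const right = dot(pPrime, multiplyMat3Vec3(S, n));    // 0: n transformed by S stays perpendicular
```

Note that the transformed normal is generally not unit length, so a shader would typically normalize it before using it in lighting calculations.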

For the remainder of these tutorials we will assume there is no non-uniform scaling in the model’s transformations.

Glossary

- surface properties: Characteristics of a triangle that determine how light is reflected off of its surface.